"Should I approve this invoice?"

This deceptively simple question launched Medius's AI journey in 2023. Their customers wanted faster, better approval decisions - and AI seemed like the perfect solution to prevent fraud.

The team ran workshops, built sophisticated AI prompts to analyze payment patterns, and created an advanced system to flag potential fraud.

The AI worked for pilot customers, but there was one problem: their users didn't use it.

"If you are approving a lot of invoices, you don't usually need help—you have the patterns, you know your suppliers," explains Khaireddine (Khairi) Amamou, Head of Growth at Medius. "Most of our users have developed incredibly efficient workflows. They use shortcuts, they've internalized the patterns. They're already experts at what AI was trying to help them with."

The Expert's Paradox

This revealed a crucial insight about AI adoption: sometimes the most sophisticated AI solutions aren't needed where users have already developed deep expertise. Medius's AP professionals had spent years developing mental models for fraud detection that were already highly efficient.

But while their power users had mastered invoice approvals, a different opportunity emerged. The team discovered high-friction areas where users hadn't developed the same level of expertise:

- Translation of foreign invoices

- Quick invoice summaries for unfamiliar situations

- The back-and-forth of supplier communications

This last one proved particularly interesting. AP teams were spending up to 25% of their time on supplier emails - a task that hadn't been optimized through years of expertise. Here, AI could provide immediate, measurable value.

Finding AI's Sweet Spot

The discovery led Medius to a crucial realization: successful AI adoption wasn't just about building sophisticated technology - it was about finding the right problems where AI could genuinely augment human workflows.

Making AI Features Work: UX Patterns That Drive Adoption

With this insight about where AI could add real value, Medius evolved their approach to focus on making AI capabilities visible at moments of actual user friction. Here's how they did it:

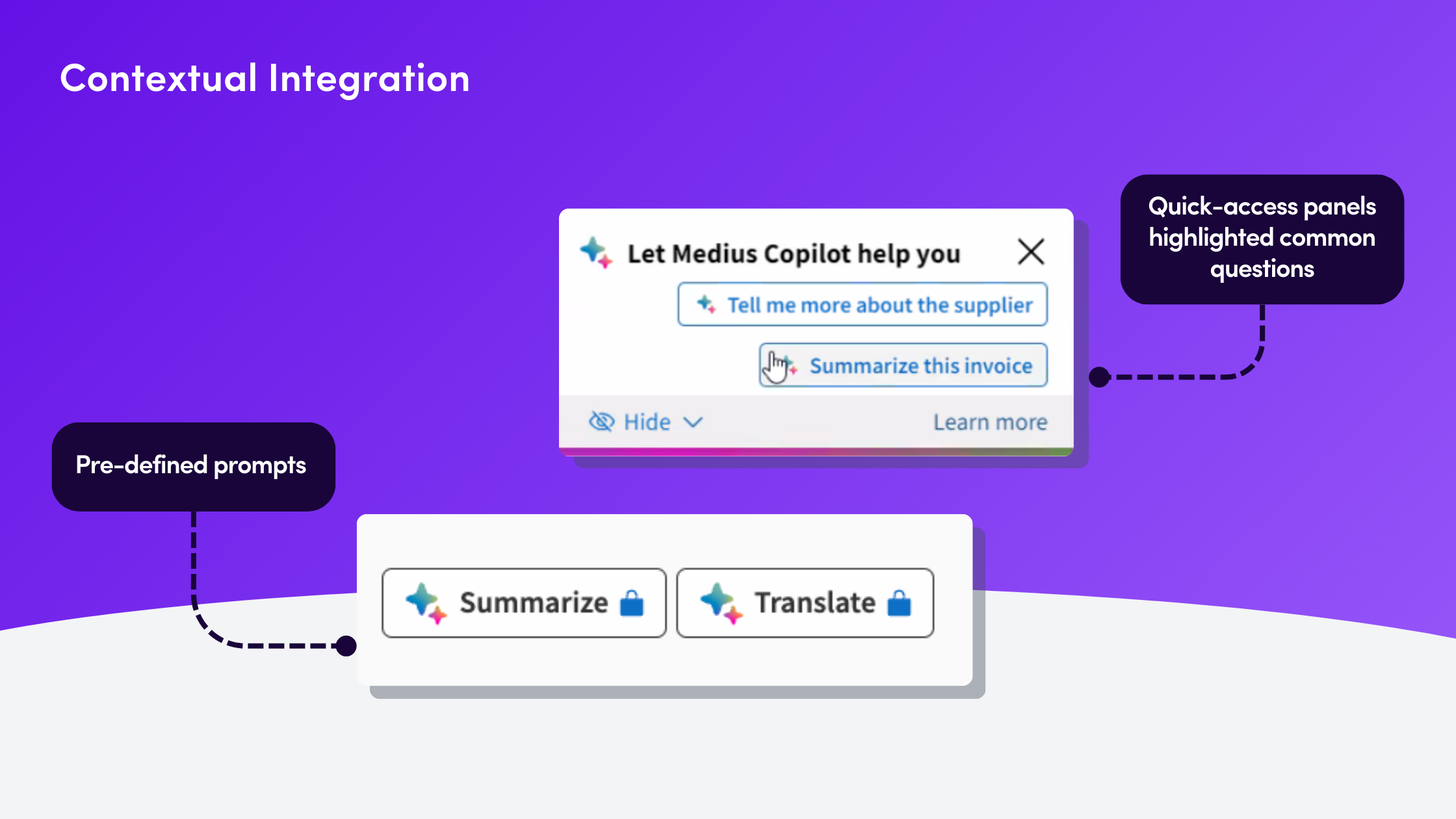

Contextual Integration

Instead of building a general chatbot and hoping users would find it, Medius embedded AI capabilities directly into specific user workflows where expertise was lacking:

- Translation buttons appeared precisely when foreign language invoices showed up

- Pre-defined prompts like "Summarize this invoice" surfaced when users encountered unfamiliar scenarios

- "Ask Copilot" emerged after detecting user hesitation on complex decisions

- Quick-access panels highlighted common questions that typically required back-and-forth communication

"It tripled the usage when [the AI] became visible and we started to see people clicking on the predefined questions," explains Khairi. "We stopped trying to compete with our users' expertise and started augmenting their workflows where they actually needed support."

Progressive Discovery

Rather than overwhelming users with AI capabilities, Medius crafted a journey that introduced features as users encountered relevant problems:

- Initial onboarding highlighted key capabilities in context

- Help center integration connected existing questions to AI solutions

- Tooltips revealed AI capabilities at relevant moments

- Example prompts rotated based on user context

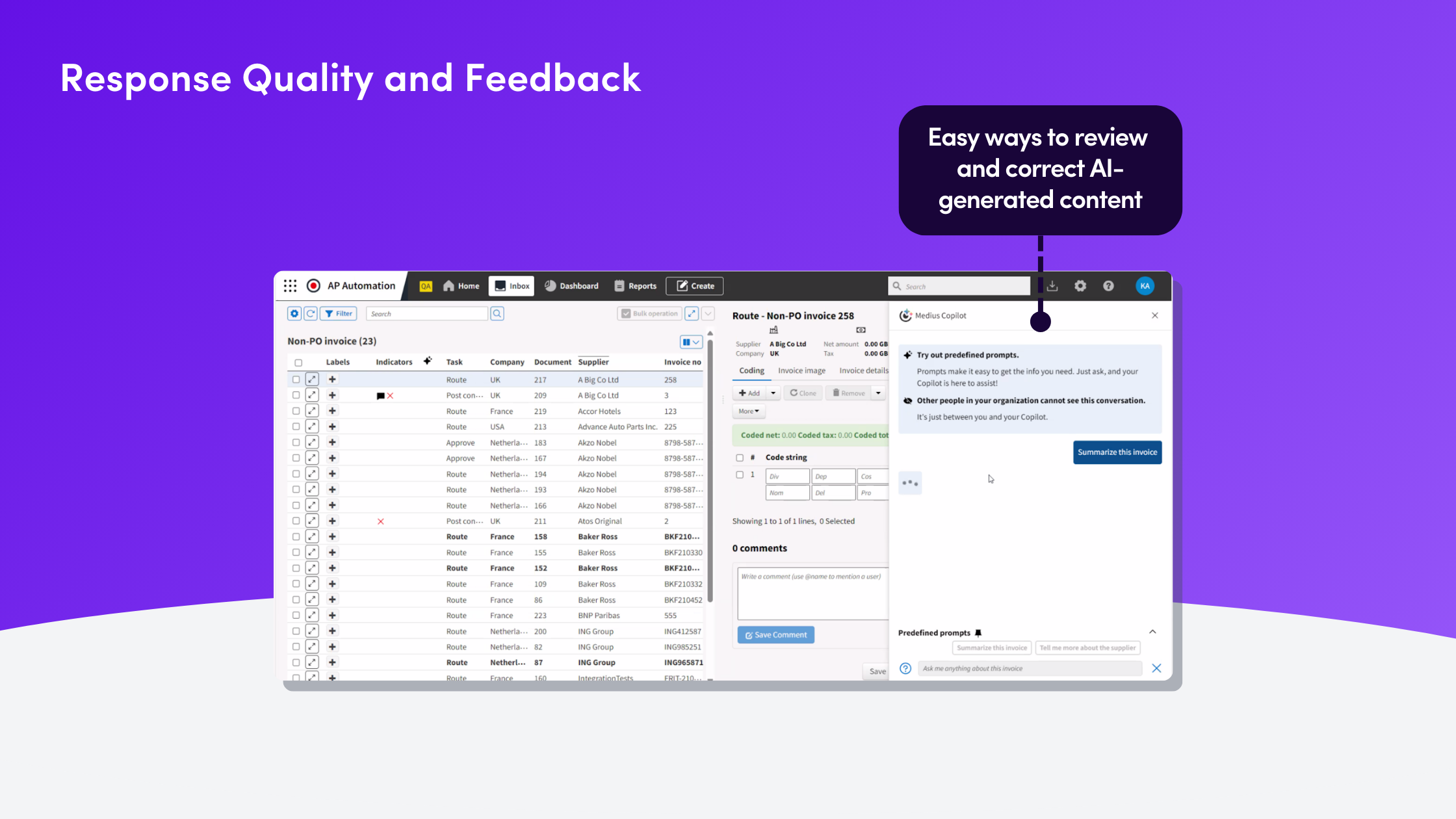

Response Quality and Feedback

For areas where AI was taking on new tasks, building trust became crucial:

- Clear feedback mechanisms on every AI response

- Follow-up suggestions that acknowledged user expertise

- Visual indicators during processing

- Easy ways to review and correct AI-generated content

The key insight? AI adoption wasn't about pushing sophisticated technology everywhere - it was about understanding where users actually needed augmentation and making those capabilities discoverable at the right moment.

The "Should a Robot Do This?" Test

This journey led Medius to develop a clear framework for evaluating where AI could add the most value. Rather than assuming AI should enhance every workflow, they developed three key criteria for identifying the right opportunities:

- Is the task repetitive but not yet optimized by user expertise?

- Is the success measurable in concrete terms?

- Does AI reduce human effort while maintaining user control?
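The three criteria can be read as a simple checklist. Here is a minimal sketch of that idea, not Medius's actual tooling: the `Candidate` fields and the yes/no values for the two example workflows are assumptions based on how the article describes invoice approvals and supplier emails.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A workflow being considered for an AI feature (hypothetical fields)."""
    name: str
    repetitive: bool          # does the task recur often?
    already_optimized: bool   # have users built efficient habits for it?
    measurable: bool          # can success be counted in concrete terms?
    user_keeps_control: bool  # can a human review or override the output?


def passes_robot_test(c: Candidate) -> bool:
    """Return True only if all three criteria hold."""
    return (
        c.repetitive and not c.already_optimized  # criterion 1
        and c.measurable                          # criterion 2
        and c.user_keeps_control                  # criterion 3
    )


# Illustrative values only, inferred from the article's description.
approvals = Candidate("invoice approvals", True, True, False, True)
supplier_email = Candidate("supplier emails", True, False, True, True)

for c in (approvals, supplier_email):
    verdict = "good fit" if passes_robot_test(c) else "poor fit"
    print(f"{c.name}: {verdict}")
```

In practice each answer would come from user research rather than a boolean someone typed in, but writing the criteria down this way makes it harder to skip one.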

"Supplier conversation is selling massively... The AI value is quite tangible, it's easy to sell. You are telling the AP teams, you are spending at least one day from your five work days, 22% statistically answering supplier questions." With the AI integration, AP teams could automate these responses while keeping full control to review each one before sending. The value proposition was clear: save a day a week.

The insight was powerful: AI adoption soared when it tackled high-friction, time-consuming tasks accurately. Areas where teams had already developed expertise and efficient workflows - like invoice approvals - weren't necessarily the best candidates for AI.

The Metrics Challenge

This insight also solved another thorny problem: measuring AI's impact. "We've had many debates about measuring return on investment," Khairi shares. "With the approval use case, measuring time saved in the approval cycle proved nearly impossible - our users were already highly efficient."

In contrast, measuring impact on supplier communications was straightforward:

- Reduction in response time to supplier queries

- Number of automated responses sent

- Time saved on email composition

- User satisfaction with AI-generated responses

Beyond the API: Building for Targeted AI

While building AI features can seem straightforward - just hit an API, right? - Medius discovered the real challenges lay in creating infrastructure that could support their targeted approach to AI implementation.

Two critical capabilities emerged:

Prompt Engineering:

- Prompt routing to match user context and expertise level

- Function calling to minimize unnecessary AI involvement

- Sophisticated prompt chains for complex queries

- RAG (Retrieval Augmented Generation) to ground responses in accurate data
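To make "prompt routing" concrete, here is a small sketch of one way it could work: plain rules pick a prompt template from the user's context, so no model call is spent deciding what to do. Everything here (the `Context` fields, route names, and template wording) is hypothetical and not taken from Medius's system.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """What the product knows when the user asks for help (hypothetical)."""
    screen: str            # e.g. "invoice" or "supplier_inbox"
    invoice_language: str  # language code of the open invoice, e.g. "de"
    user_language: str     # the user's preferred language, e.g. "en"


# Hypothetical prompt templates keyed by route name.
TEMPLATES = {
    "translate": "Translate this invoice from {invoice_language} to {user_language}:\n{text}",
    "supplier_reply": "Draft a reply to this supplier email for the user to review:\n{text}",
    "summarize": "Summarize this invoice in three bullet points:\n{text}",
}


def route(ctx: Context) -> str:
    """Pick a route from simple rules based on where the user is."""
    if ctx.screen == "supplier_inbox":
        return "supplier_reply"
    if ctx.invoice_language != ctx.user_language:
        return "translate"
    return "summarize"


def build_prompt(ctx: Context, text: str) -> str:
    """Fill the chosen template with context fields and the document text."""
    template = TEMPLATES[route(ctx)]
    return template.format(
        invoice_language=ctx.invoice_language,
        user_language=ctx.user_language,
        text=text,
    )


print(build_prompt(Context("invoice", "de", "en"), "Rechnung Nr. 1001"))
```

The resulting string would then be sent to whichever model the product uses; that call is left out because it depends on the provider.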

QA for AI:

- Systematic testing of responses across different user scenarios

- Monitoring for response quality and consistency

- Specific test cases for each workflow context

- Performance metrics tied to actual user value
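One lightweight way to approach this kind of QA is a regression suite that runs fixed inputs through the AI feature and reports a pass rate per workflow. The sketch below assumes a hypothetical `generate(workflow, text)` function wrapping the model call; the test cases and the "required phrases" check are invented for illustration and are far simpler than a production evaluation would be.

```python
from typing import Callable

# Each case: (workflow context, input text, phrases the response must contain).
# These cases are invented for illustration.
TEST_CASES = [
    ("summarize", "Invoice 1001 from Acme, total 250.00 EUR", ["Acme", "250.00"]),
    ("supplier_reply", "When will invoice 1001 be paid?", ["1001"]),
]


def run_suite(generate: Callable[[str, str], str]) -> dict[str, float]:
    """Run every case through `generate` and return the pass rate per workflow."""
    passed: dict[str, int] = {}
    total: dict[str, int] = {}
    for workflow, text, required in TEST_CASES:
        response = generate(workflow, text)
        ok = all(phrase in response for phrase in required)
        total[workflow] = total.get(workflow, 0) + 1
        passed[workflow] = passed.get(workflow, 0) + int(ok)
    return {w: passed[w] / total[w] for w in total}


def fake_generate(workflow: str, text: str) -> str:
    """Stand-in for a real model call: echoes the input so the suite runs offline."""
    return f"[{workflow}] {text}"


print(run_suite(fake_generate))
```

Running the same suite after every prompt change gives a quick signal on whether a given workflow got better or worse.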

"Today, building chatbots is easy," Khairi notes. "The real differentiator is whether you can scale them effectively while maintaining quality. As prompts get more complex and users get more creative with their questions, you need robust systems to support them."

A Framework for AI Product Development

Medius's journey revealed a clear framework for product teams considering AI features. Before starting, ask yourself:

- Are you solving a problem your users haven't already mastered?

- Is the problem specific and measurable?

- Do you have the resources for proper context management and QA?

- Can you clearly identify where user expertise ends and AI assistance begins?

As Khairi puts it: "Don't get f***ing adrenaline just because it's AI. Product development and problem solving, it's the same at the end of the day."

The Art of Finding Where AI Fits

Medius's journey reveals a counterintuitive truth about AI product development: sometimes the most sophisticated problems aren't the ones that need AI solutions. Their power users had already mastered complex approval decisions through years of experience. The real opportunity lay in the seemingly simpler but high-friction tasks that hadn't benefited from years of workflow optimization.

This discovery transformed their approach to AI development. Instead of trying to replicate expert judgment, they focused on augmenting human workflows where expertise hadn't yet developed. The result? A suite of AI capabilities that didn't compete with user knowledge, but enhanced it at exactly the right moments.

A few lessons for PMs looking to launch AI features:

- Don't assume complex problems need AI solutions

- Look for high-friction areas where users haven't developed expertise

- Build infrastructure that can support targeted, contextual AI assistance

- Measure success where it's actually measurable
